Today, I'll summarize the first module of GCC Study.

To get more comfortable with English, I will write this summary in English.

1. The Path to Modern Computers

Computing had grown steadily since the invention of the Analytical Engine, but it made a significant leap forward during World War II. Governments poured money into computing research during the war, leading to advancements in cryptography and the use of computers for processing secret messages. Alan Turing, a renowned computer scientist, played a crucial role in breaking the top-secret Enigma cipher used for Axis communications. After the war, companies like IBM and Hewlett-Packard advanced computing technologies in various sectors.

Technological advancements, including the shift from punch cards to magnetic tape, fueled the growth of computational power. Early computers were large machines built on vacuum tubes. The ENIAC, one of the earliest general-purpose computers, had more than 17,000 vacuum tubes and occupied 1,800 square feet of floor space. The industry eventually replaced vacuum tubes with transistors, which allowed more compact and efficient designs. The invention of the compiler by Admiral Grace Hopper marked another significant milestone. The industry then saw the introduction of hard disk drives, microprocessors, and smaller computers. The Xerox Alto is widely regarded as the first computer with a graphical user interface, and it resembled modern computers. The emergence of personal computers like the Apple II and IBM's PC made computing more accessible to the average consumer.

With huge players like Apple's Macintosh and Microsoft's Windows taking over the operating system space, a programmer named Richard Stallman started developing a free Unix-like operating system. Unix was an operating system developed by Ken Thompson and Dennis Ritchie, but it wasn't cheap and wasn't available to everyone. Stallman called his OS GNU. It was meant to be free to use, with functionality similar to Unix. Unlike Windows or Macintosh, GNU wasn't owned by a single company. Its code was open source, which meant that anyone could modify and share it. GNU didn't evolve into a full operating system on its own, but it laid the foundation for Linux, one of the largest open-source operating systems. The 1990s saw the introduction of PDAs, including a Nokia model with mobile phone functionality, leading to the rise of smartphones and mobile computing.

2. Digital Logic

Binary System: The language a computer uses to communicate, also known as the base-2 numeral system.

(8 bits = 1 byte)

Q. What does the following translate to?

01101000 01100101 01101100 01101100 01101111

A. hello

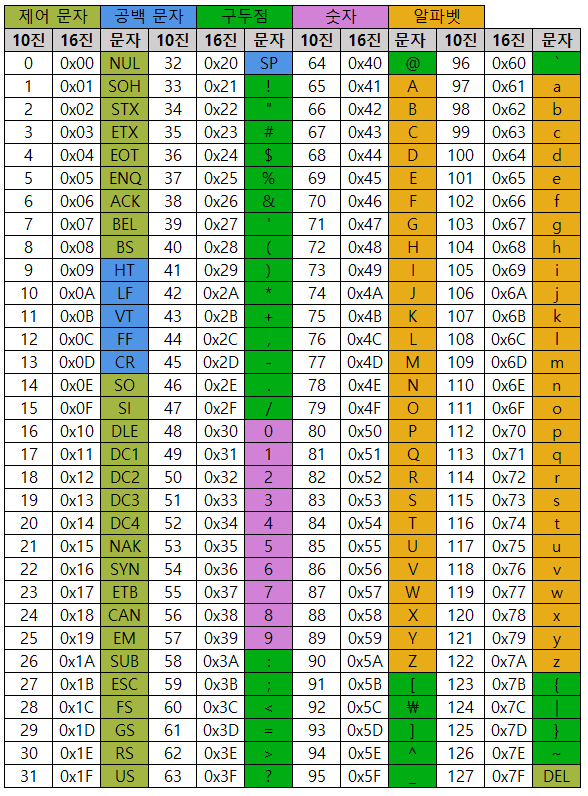
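As a rough check of the answer above, here is a minimal Python sketch that decodes the same bit groups. The function name `decode_ascii` is my own choice for illustration and does not come from the course.

```python
# The binary string from the question above, one 8-bit group per character.
bits = "01101000 01100101 01101100 01101100 01101111"


def decode_ascii(binary_text: str) -> str:
    """Convert space-separated 8-bit groups into their ASCII characters."""
    # int(group, 2) reads the group as a base-2 number; chr() maps it to a character.
    return "".join(chr(int(group, 2)) for group in binary_text.split())


decoded = decode_ascii(bits)
print(decoded)  # hello
```

Each group becomes a number first (for example, 01101000 is 104), and the character table then maps that number to a letter.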

Character Encoding: Assigns our binary values to characters, so that we as humans can read them.

The oldest character encoding standard in use is ASCII. It represents the English alphabet, digits, and punctuation marks. The great thing about ASCII was that it only needed 128 of the 256 possible values of a byte (codes 0 to 127). It lasted for a very long time, but eventually it wasn't enough. Other character encoding standards were created to represent different languages, larger character sets, and more. Then came UTF-8, the most prevalent encoding standard used today. Along with keeping the same ASCII table, it lets us use a variable number of bytes per character. Think of any emoji. A single byte doesn't have enough values to cover emojis on top of everything else, so UTF-8 allows a character to be stored in more than one byte, which means endless emoji fun. UTF-8 is built on the Unicode Standard.
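To see the variable byte length in practice, here is a small Python sketch. The sample characters and the helper name `utf8_length` are my own picks for illustration.

```python
# Sample characters, written as escapes so the exact code points are unambiguous.
samples = {
    "h": "h",                  # plain ASCII letter
    "e-acute": "\u00e9",       # accented Latin letter
    "hangul": "\ud55c",        # Korean syllable
    "emoji": "\U0001F600",     # grinning face emoji
}


def utf8_length(char: str) -> int:
    """Return how many bytes UTF-8 needs to store one character."""
    return len(char.encode("utf-8"))


for name, char in samples.items():
    print(name, utf8_length(char))
# h 1
# e-acute 2
# hangul 3
# emoji 4

# ASCII characters keep the same single-byte value in UTF-8.
print("h".encode("utf-8") == "h".encode("ascii"))  # True
```

The ASCII letter still fits in one byte, while the emoji needs four.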

Logic gates: Logic gates allow our transistors to do more complex tasks, like decide where to send electrical signals depending on logical conditions.

Details about logic gates:

https://simple.wikipedia.org/wiki/Logic_gate


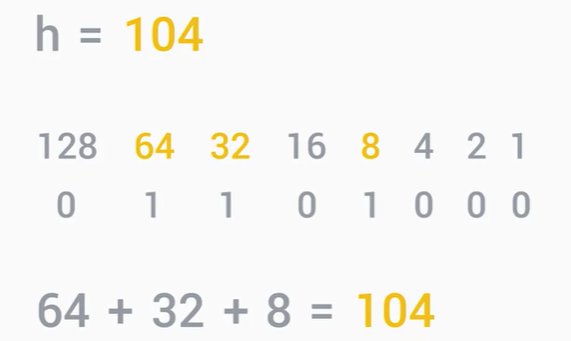
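Logic gates are hardware, but their behavior can be imitated in software. The following is only a sketch in Python that treats 1 as "signal on" and 0 as "signal off". The function names are hypothetical, and building XOR out of the other gates is one possible construction among several.

```python
def and_gate(a: int, b: int) -> int:
    """Output 1 only when both inputs are 1."""
    return a & b


def or_gate(a: int, b: int) -> int:
    """Output 1 when at least one input is 1."""
    return a | b


def not_gate(a: int) -> int:
    """Flip the input: 1 becomes 0 and 0 becomes 1."""
    return 1 - a


def xor_gate(a: int, b: int) -> int:
    """Output 1 when exactly one input is 1, built from the gates above."""
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))


# Print a truth table covering every input combination.
print("a b AND OR XOR")
for a in (0, 1):
    for b in (0, 1):
        print(a, b, and_gate(a, b), or_gate(a, b), xor_gate(a, b))
```

Combining simple gates into a more complex one, as `xor_gate` does, is the same idea that lets transistors handle more complex tasks.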

How to Count in Binary: Binary is the fundamental building block of computer communication, but it's used to represent more than just text and images. It's used in many aspects of computing, like networking and security. Binary only uses zero and one, so to read it we convert it to a system we can understand: decimal. The decimal system has 10 possible digits, ranging from zero to nine. Given enough bits, we can represent any whole number.

Consider the eight place values of a byte: 128, 64, 32, 16, 8, 4, 2, and 1. Going right to left, each number is double the previous one. If you add them all up, you get 255. That may seem odd, since a byte can hold 256 values. It can: zero counts as a value, so the 256 values run from 0 to 255, and the maximum decimal number a byte can hold is 255.

To convert a byte to decimal, add up the place values wherever the bit is 1. The letter h in binary is 01101000, and an ASCII table gives h as 104 in decimal. Checking with the place values: the 1 bits sit at 64, 32, and 8, and 64 plus 32 plus 8 equals 104.
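The place-value conversion can be sketched in a few lines of Python. The names `PLACE_VALUES` and `byte_to_decimal` are made up for this example.

```python
# Place values of the eight bits in a byte, from the leftmost bit to the rightmost.
PLACE_VALUES = [128, 64, 32, 16, 8, 4, 2, 1]


def byte_to_decimal(bits: str) -> int:
    """Add up the place values of every position that holds a 1."""
    if len(bits) != 8 or set(bits) - {"0", "1"}:
        raise ValueError("expected exactly eight characters, each 0 or 1")
    return sum(value for bit, value in zip(bits, PLACE_VALUES) if bit == "1")


print(byte_to_decimal("01101000"))  # 104, which is 64 + 32 + 8
print(chr(104))                     # h
print(sum(PLACE_VALUES))            # 255, the largest value one byte can hold
print(byte_to_decimal("00000000"))  # 0, the 256th possible value
```

Python's built-in `int("01101000", 2)` gives the same result. The manual version is only there to make the place values visible.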

3. Computer Architecture Layer

Abstraction: To take a relatively complex system and simplify it for our use.

Thanks to abstractions, the average computer user doesn't have to worry about the technical details. One simple example of abstraction that you might see a lot in an IT role is an error message. We don't have to dig through someone else's code to find a bug. That work has already been abstracted away for us in the form of an error message. A simple error message like "file not found" actually tells us a lot and saves us time in figuring out a solution.
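As a small illustration of that idea, this Python sketch tries to open a file that does not exist. The helper `try_open` and the file name `missing.txt` are hypothetical. The point is that Python reports the failure as a readable `FileNotFoundError` without us inspecting how the operating system looked for the file.

```python
import os
import tempfile


def try_open(path: str) -> str:
    """Return a short status message instead of exposing low-level details."""
    try:
        with open(path, encoding="utf-8"):
            return f"Opened {path}"
    except FileNotFoundError as error:
        # error.filename holds the path that could not be found.
        return f"File not found: {error.filename}"


# A freshly created temporary folder is empty, so this path cannot exist.
with tempfile.TemporaryDirectory() as folder:
    missing_path = os.path.join(folder, "missing.txt")
    message = try_open(missing_path)

print(message)
```

The message names the problem and the path involved, which is usually enough to start troubleshooting.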

Computer Architecture Overview: A computer can be divided into four main layers: hardware, operating system, software, and users.

The hardware layer is made up of the physical components of a computer. These are objects you can physically hold in your hand. Laptops, phones, monitors, keyboards, you get the idea.

The operating system allows hardware to communicate with the system. Hardware is created by many different manufacturers. The operating system allows them to be used with our system, regardless of where it came from.

The software layer is how we as humans interact with our computers. When you use a computer, you're given a vast amount of software that you interact with, whether it's a mobile app, a web browser, a word processor, or the operating system itself.

The last layer may not seem like part of the system, but it's an essential layer of the computer architecture: the user. The user interacts with the computer, and she can do more than that. She can operate, maintain, and even program the computer. The user layer is one of the most important layers we'll learn about. When you step into the field of IT, you may have your hands full with the technical aspects, but the most important part of IT is the human element. While we work with computers every day, it is user interaction that makes up most of our job, from responding to user emails to fixing their computers.

That's all for the first module.

Make sure to have strong fundamentals.
